For the past forty years, companies have built their financial and business decisions around information held in traditional data structures. Virtually all financial systems, regardless of who designed them, have been built around relational databases, so every one of these systems inherits the same set of issues and challenges.

The primary limiting factor of a traditional relational database is the practical limit on how much data it can hold before performance suffers. In most companies, the flood of newly created data has already outstripped these limits. In response, virtually all companies have resorted to segmenting their information into separate, standalone databases that are faster to work with and easier to manage.

For example, the General Ledger is often separated from sub-ledgers, and other critical functions such as profitability analysis, revenue recognition and management reporting are relegated to standalone programs and dedicated servers.

Although the goal of segmentation is faster performance from each database, every time a function or data set is handled separately, informational gaps are created. This sets off a host of problems, starting with conflicting interpretations of what is supposedly the same information, depending on where and how the data is stored. The result is a constant need to reconcile data drawn from different databases and to impose rigid “data cleansing” processes before any decision can be made.

Of course, these interim steps to cleanse and validate the data are cumbersome, time-consuming and a big drain on productivity. Managers can end up spending far more time debating the accuracy of each other’s data than actually making decisions.

In recent years, thought leaders have been grappling with these issues, searching for effective ways to unify separate data repositories with the core processing of transactions. The overall goals have been to increase accuracy, efficiency and performance by eliminating the need to massage, analyze, reconcile and move data before acting on it, while at the same time accelerating transaction speed. Steady improvements in chip performance, memory speeds and in-memory software architectures have now made those goals achievable.

By leveraging the in-memory data and processing capabilities of the SAP S/4HANA architecture and its S/4HANA Cloud deployment scenarios, CFOs can seamlessly unify their information landscape, closing the gaps and easing the pain points that arise from the artificial segmentation of information.

Instead of constantly reassembling disparate pieces of the picture, CFOs and staff throughout the company can see a holistic, real-time view that encompasses all operational data sets and analysis capabilities within a single, unified architecture.

In addition to improving both access to information and the ability to manipulate it, S/4HANA dramatically improves real-time analytics performance, because nothing has to be moved, massaged or reconciled before analysis.

With S/4HANA, you now have access to all the data in real time. So how does this change the way we approach data? In the past, we struggled just to get data: you had to extract it, put it into files and then make do with whatever you had. As users of data, we had to think long and hard about exactly which data we needed to answer specific questions.

The heart of this new unified approach is the Universal Journal, which finally delivers the long-sought "Single-Source-of-Truth" for decision making. It embodies the concept that all data is unified within a single, unsegmented database, so that all processes and people see the same information in real time.

This is possible because S/4HANA offers three transformative innovations:

- Data compression of 7 to 10 times, which greatly shrinks the data footprint

- In-memory storage, which takes data off disk and puts it into main memory so it’s immediately available

- Real-time information for front-line users, delivered through flexible S/4HANA deployment scenarios and personalized user interface technologies

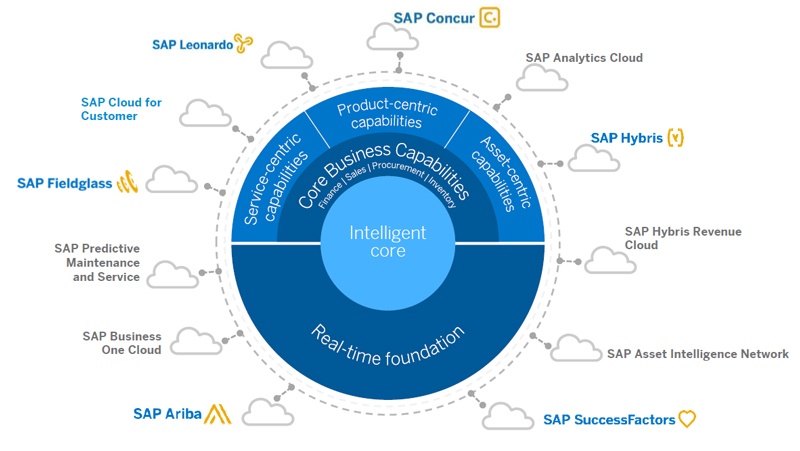

S/4HANA enables a complete re-imagining of the financial architecture and application structure, so that everything can be driven in real time from the single SAP Intelligent Digital Core.

Once everyone is working with the same information and operating in sync, the single-source-of-truth also begins to inform high-level strategy and long-term planning. Instead of constantly struggling to understand where you are, the whole team can turn its focus and energy toward getting where you want to be.

Now that we have instant access to raw transaction-level data, and are constantly adding more data through technologies such as the Internet of Things (IoT) and machine learning, companies need partners such as Bramasol who are experts in deploying solutions with the agility and scalability to handle escalating data and transaction-processing requirements.

Bramasol is a co-innovation leader in implementing SAP S/4HANA (On-Premise and Cloud), helping customers achieve high-performance results with in-memory capabilities and an extensible Digital Core.

Our capabilities include leveraging S/4HANA technology in purpose-built offerings such as our Rapid RevRecReady Compliance Solution and Rapid Leasing Compliance Solution, as well as helping our customers incorporate S/4HANA benefits into their ongoing Finance Innovation initiatives.

Click here to request a demo of S/4HANA Cloud and get expert answers to your specific needs.